Brief

Background and My Contribution

The thesis was independently developed for the AVATAR project. AVATAR is an Erasmus+ project to support and facilitate the adoption of VR/AR technologies in engineering curricula.

My personal contribution to this project was creating digital human model assets, creating 3D assets for the VR environment, researching hand tracking in VR (Oculus SDK), and creating a VR hand tracking demo in Unity3D for my thesis.

The Context

Collaborative robots (cobots) are expected to be widely used to improve the efficiency of manufacturing processes, and more and more scholars are exploring the future of human-robot interaction (HRI).

In cutting-edge HRI research, many studies have shown the advantages of studying human-robot interaction in virtual reality. At the same time, digital human technology is also being used to explore human-robot interaction.

Current virtual technologies allow us to reconstruct a near-real robot in a virtual environment. This thesis, however, focuses only on the simulation of the digital human.

The Objective

Therefore, to better investigate HRI, it is essential to develop a VR-based ergonomic test system that realizes a certain degree of simulation and analysis of the manufacturing activities carried out by the human worker.

To achieve the final objective, three development activities were executed:

1. Create VR scene
2. Create digital human model
3. Simulate digital human movements

Create VR Scene

How to create models for the VR scene?

Before creating the VR scene, it is essential to choose the right development platform. After detailed research, I chose Oculus as the hardware platform: it is a standalone VR headset that supports hand tracking and comes with a comprehensive SDK. Unity is the software development platform: it is one of the most popular game engines, it is supported by Oculus, and it is free.

Models are the basic building blocks of a VR scene. So, how do we create models for the VR scene?

The first thing to do is list all the models we need. Here is a simple manufacturing process: in the first step, the worker places the metal component in the pallet; in the second step, the worker installs the pallet in the machining center; in the third step, the machining center starts manufacturing; and in the last step, the worker removes the finished component. Therefore, we need at least these models: a metal component, a pallet, a machining center, and the finished component.

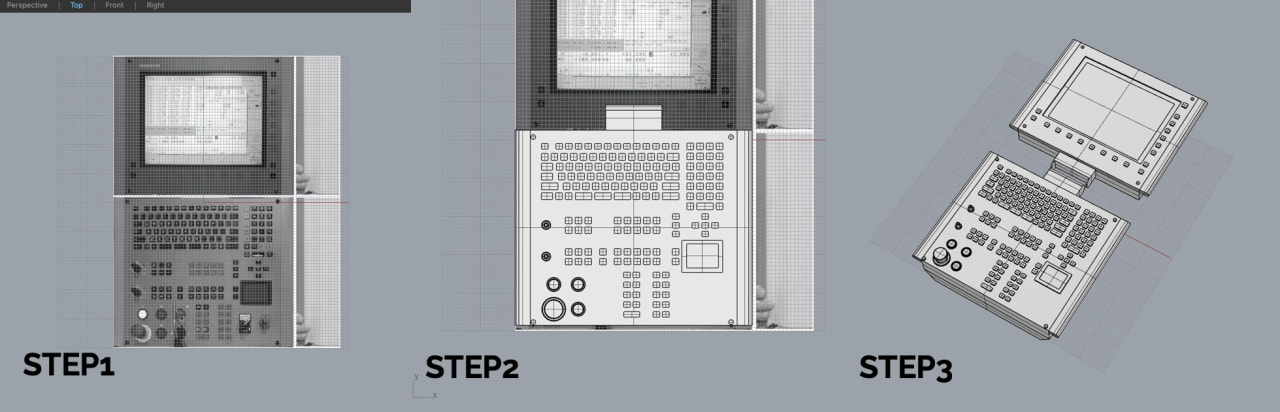

Then we can start creating the models. Here I used Rhino to create a model from a reference image. Even when the model is created, it does not look realistic, because it does not have a texture. Texturing is basically like painting on the model, and we can use tools like Quixel Mixer to do it.

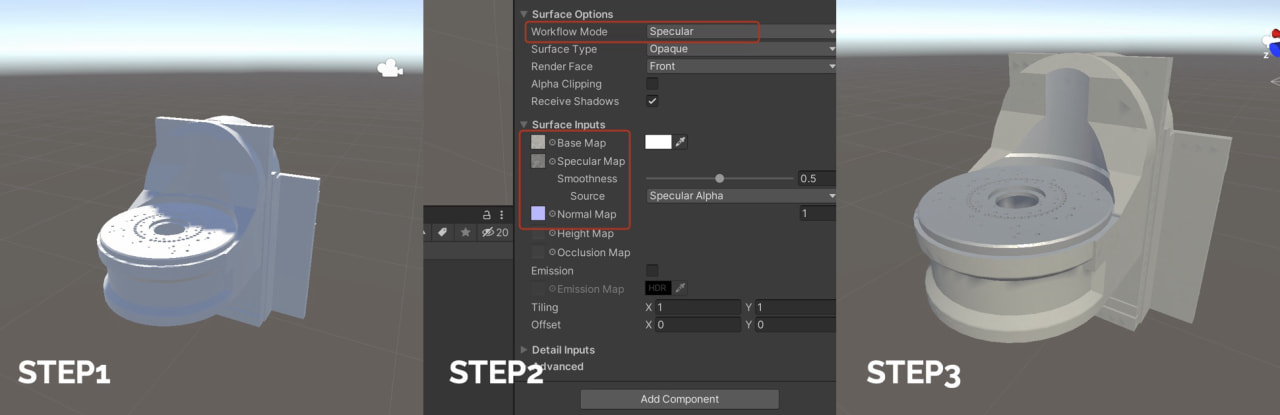

When the model and textures are ready, we can import them into our development engine, which is Unity. First, we assign a material to the model; second, we link our textures to the target material properly; and the third step shows how the final model is displayed in Unity.

When all the models are finished, we can adjust their positions inside Unity, and here is what the final VR scene looks like. At this point, however, the VR environment is still static, not interactive.

How to make the VR scene interactive?

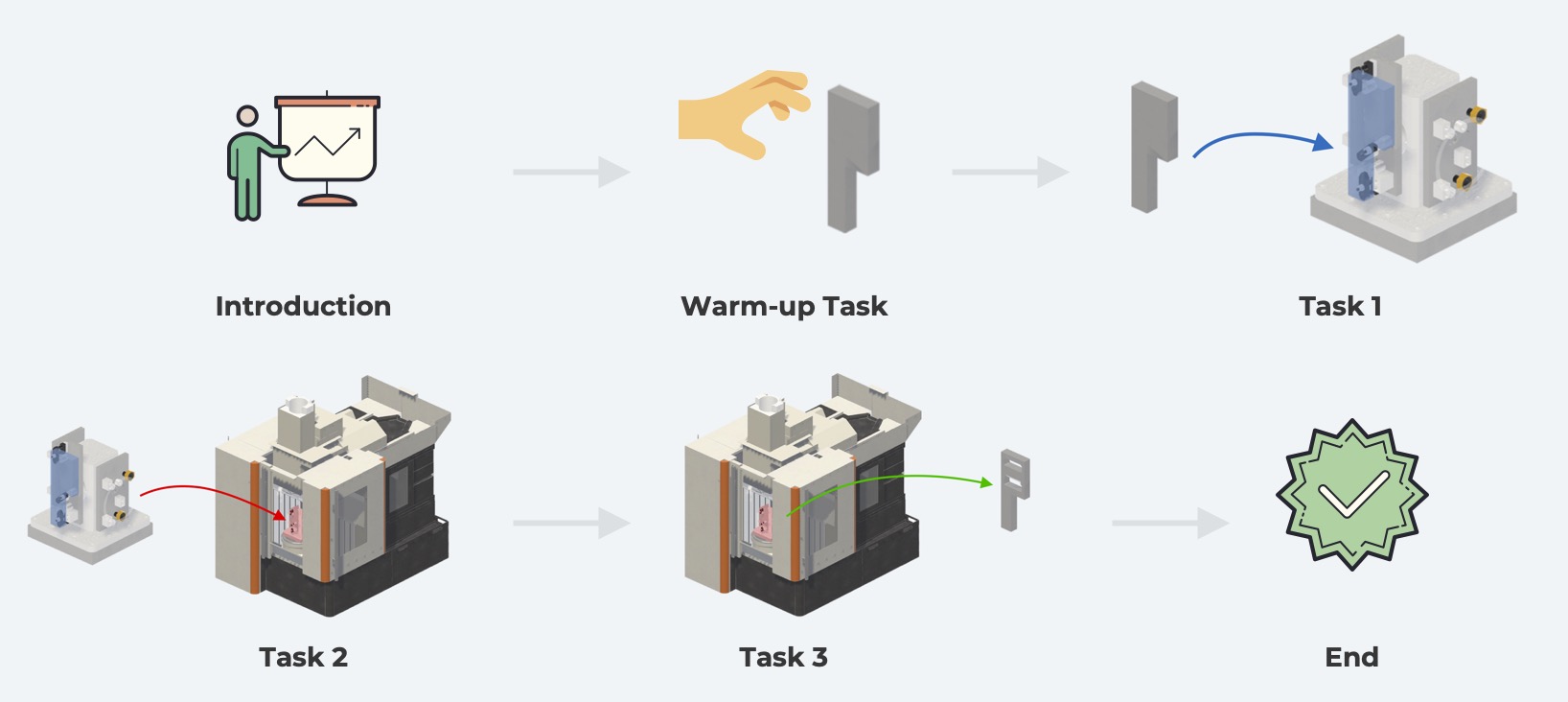

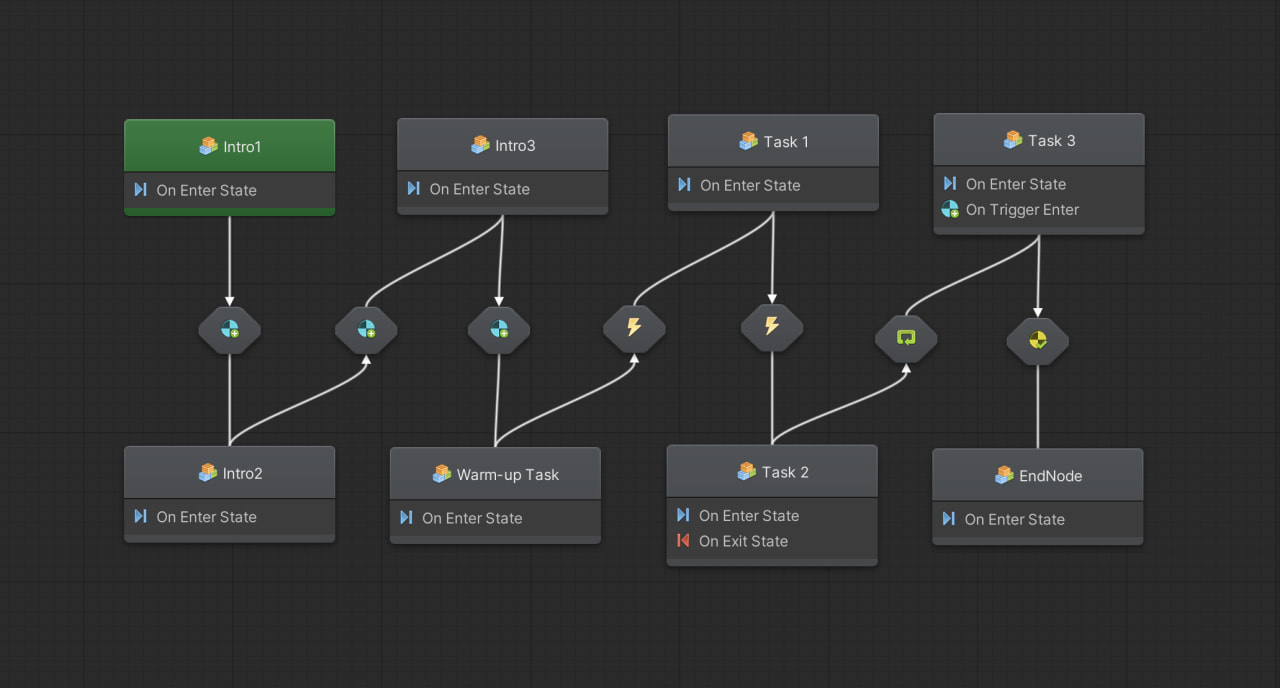

First, we need a test flow of the manufacturing activities so that we can decide which models are interactive. As you can see here, we have an introduction activity (to introduce the VR scenario) and a warm-up task (to let participants get familiar with their VR hands), followed by Task 1 (place the metal component in the pallet), Task 2 (place the pallet in the machining center), Task 3 (remove the finished component), and finally an end activity.

With the test flow defined, we can start scripting to make the models interactive. In the thesis, I used Bolt to program the logic. Bolt is a free visual programming tool for Unity. As you can see in the picture, I separated the introduction activity into three parts, followed by the warm-up task and the three manufacturing tasks. After this step, the interactive VR scene is finished.
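The thesis implements this logic visually in Bolt, so no script exists in the original work. Purely as an illustration, the same sequential test flow could be sketched in Python as a small state holder that advances one activity at a time. The `ActivityFlow` class and its method names are hypothetical; only the activity names come from the test flow above.

```python
from dataclasses import dataclass, field

# Activity names follow the test flow described in the text.
# The class below is a hypothetical stand-in for the Bolt graph.
ACTIVITIES = [
    "Introduction",
    "Warm-up task",
    "Task 1: place the metal component in the pallet",
    "Task 2: place the pallet in the machining center",
    "Task 3: remove the finished component",
    "End",
]


@dataclass
class ActivityFlow:
    activities: list = field(default_factory=lambda: list(ACTIVITIES))
    index: int = 0

    @property
    def current(self) -> str:
        """Name of the activity the participant is currently in."""
        return self.activities[self.index]

    @property
    def finished(self) -> bool:
        """True once the flow has reached the last activity."""
        return self.index == len(self.activities) - 1

    def complete_current(self) -> str:
        """Advance to the next activity; stay on the last one when done."""
        if not self.finished:
            self.index += 1
        return self.current


if __name__ == "__main__":
    flow = ActivityFlow()
    print(flow.current)
    while not flow.finished:
        print(flow.complete_current())
```

In a real scene, `complete_current` would be triggered by an in-scene event, such as the component being placed in the pallet, rather than by a loop.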

Create Digital Human Model

Which tools were used to create the two types of human models?

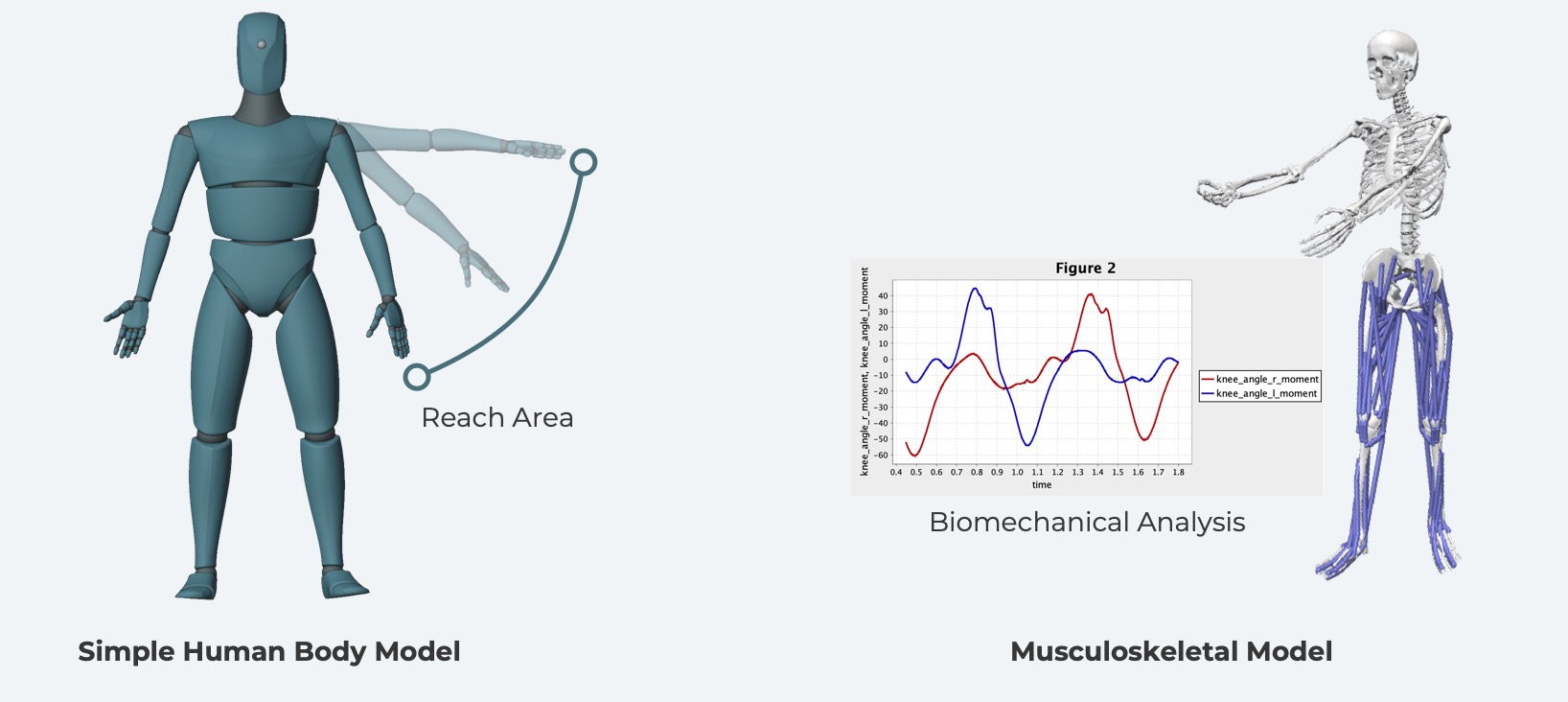

Before creating a digital human model, we need to understand the two types of human models. The first is a simple human body model, which can be used for simple ergonomic analyses such as reach area and visible area. The second is a complex musculoskeletal model, which can be used for detailed biomechanical analyses such as joint reaction and muscle analysis.

Blender is a great tool for creating the simple human body model. It is free, open-source software. As you can see, a template human body model was created with Blender. The model supports FBX, GLB, and glTF export, so it can be used in Unity.

For the complex human model, OpenSim can be used to model the human muscular system. OpenSim is free, open-source software that lets users develop musculoskeletal structures and create dynamic simulations of movement. The OpenSim team provides many template models in its library, and I selected a full-body musculoskeletal model from it.

How to use template models to represent a huge variety of body sizes of the workers?

Now that we have the template human models, how can we use them to represent the wide variety of body sizes among workers?

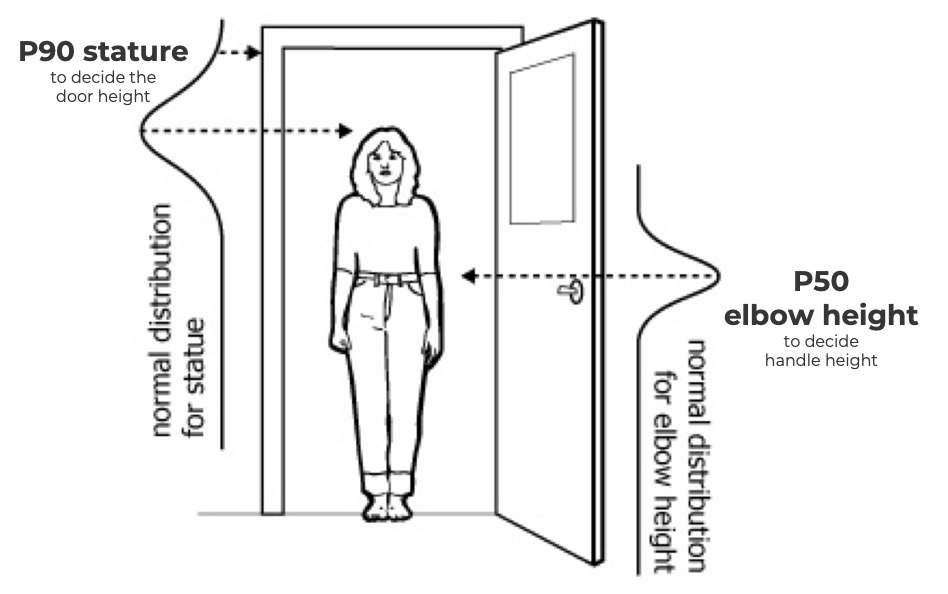

We need to understand the concept of anthropometry. It is the science of measuring human individuals in a systematic way, and it has been widely adopted in the fields of industrial design, architecture, and ergonomics. Anthropometric data is usually presented in percentiles. Take a look at the image: for instance, given the height data of a population, we can use the P95 height to decide the height of a door, because a door that is high enough for tall people (P95) will also let short people (P5) pass through.
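To make the percentile idea concrete, here is a minimal Python sketch that computes P5 and P95 from a list of heights. The sample values are made up for illustration and do not come from any anthropometric database, and the `percentile` helper is a hypothetical name.

```python
import statistics


def percentile(values, p):
    """Return the p-th percentile (p in 1..99) using linear interpolation."""
    if not 1 <= p <= 99:
        raise ValueError("p must be between 1 and 99")
    # quantiles(..., n=100) returns the 99 cut points P1..P99.
    cut_points = statistics.quantiles(values, n=100, method="inclusive")
    return cut_points[p - 1]


if __name__ == "__main__":
    # Made-up standing heights in centimetres (illustration only).
    heights_cm = [158, 162, 165, 168, 170, 172, 174, 176, 179, 183, 188]
    p5 = percentile(heights_cm, 5)
    p95 = percentile(heights_cm, 95)
    print(f"P5 = {p5:.1f} cm, P95 = {p95:.1f} cm")
    # A clearance sized for P95 also fits everyone shorter, including P5.
```

Whether to design for P5 or P95 depends on the dimension: clearances such as door height are usually driven by the large percentile, while reach distances are driven by the small one.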

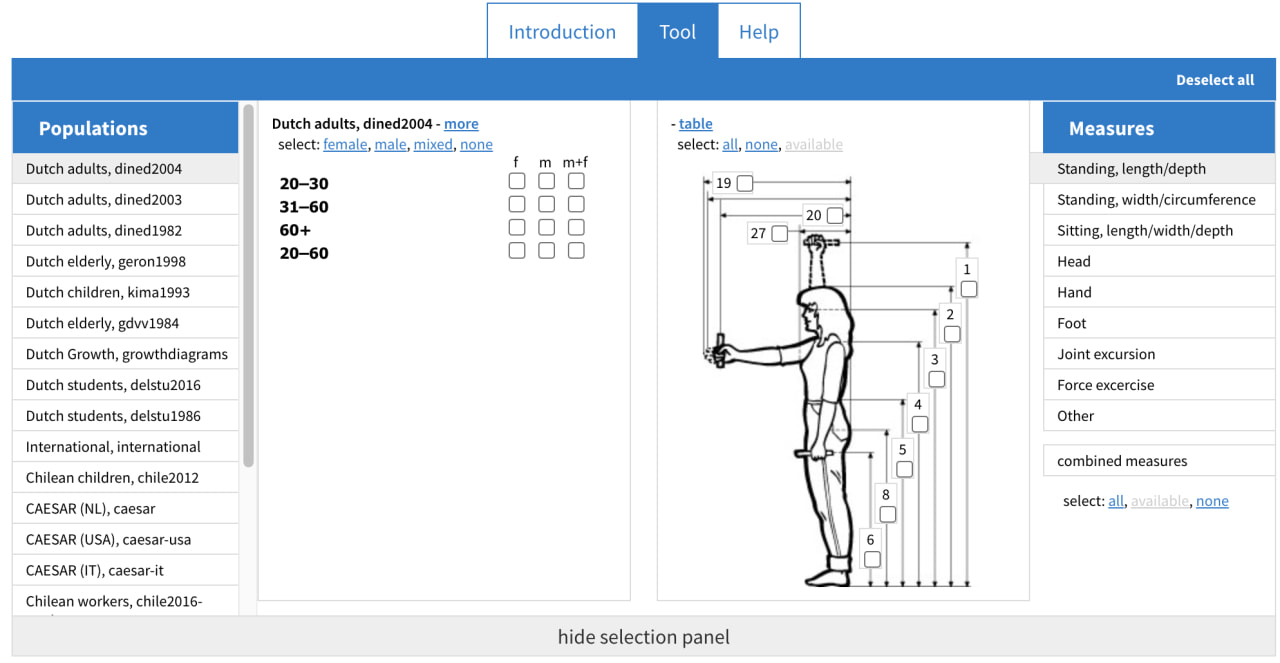

So where do we get the anthropometric data? We can use an anthropometric database. For example, DINED is an anthropometric toolkit developed by TU Delft with data for many populations, such as Dutch, American, Italian, and so on.

How to use anthropometric data to change the dimension of a human model?

From desk research, the following approaches can support anthropometric modeling. For the simple human body model, we can do manual parameterized modeling, generate a model from a 3D anthropometric database, or use an algorithm to reconstruct the human body. For the musculoskeletal model, however, we can only scale the model in OpenSim with a marker file.

Let’s see how it works. For the simple model, we first collect the anthropometric data from the database and paste it into the Excel toolkit developed for the thesis. The toolkit shows the human body dimensions graphically, and finally we can change each body segment manually.
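The arithmetic behind changing each body segment can be sketched as a per-segment scale factor: the target length from the anthropometric data divided by the length of the same segment in the template model. The Excel toolkit itself is not reproduced here; the function name, segment names, and numbers below are hypothetical.

```python
def segment_scale_factors(template_mm, target_mm):
    """Return {segment: target length / template length} for every template segment."""
    missing = sorted(set(template_mm) - set(target_mm))
    if missing:
        raise KeyError(f"no target dimension for segments: {missing}")
    return {name: target_mm[name] / template_mm[name] for name in template_mm}


if __name__ == "__main__":
    # Made-up segment lengths in millimetres (illustration only).
    template = {"upper_arm": 300.0, "forearm": 250.0, "thigh": 420.0}
    target = {"upper_arm": 330.0, "forearm": 260.0, "thigh": 441.0}
    for segment, factor in segment_scale_factors(template, target).items():
        print(f"{segment}: scale by {factor:.3f}")
```

Each factor would then be applied to the corresponding segment of the template model, for example as a bone scale in Blender.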

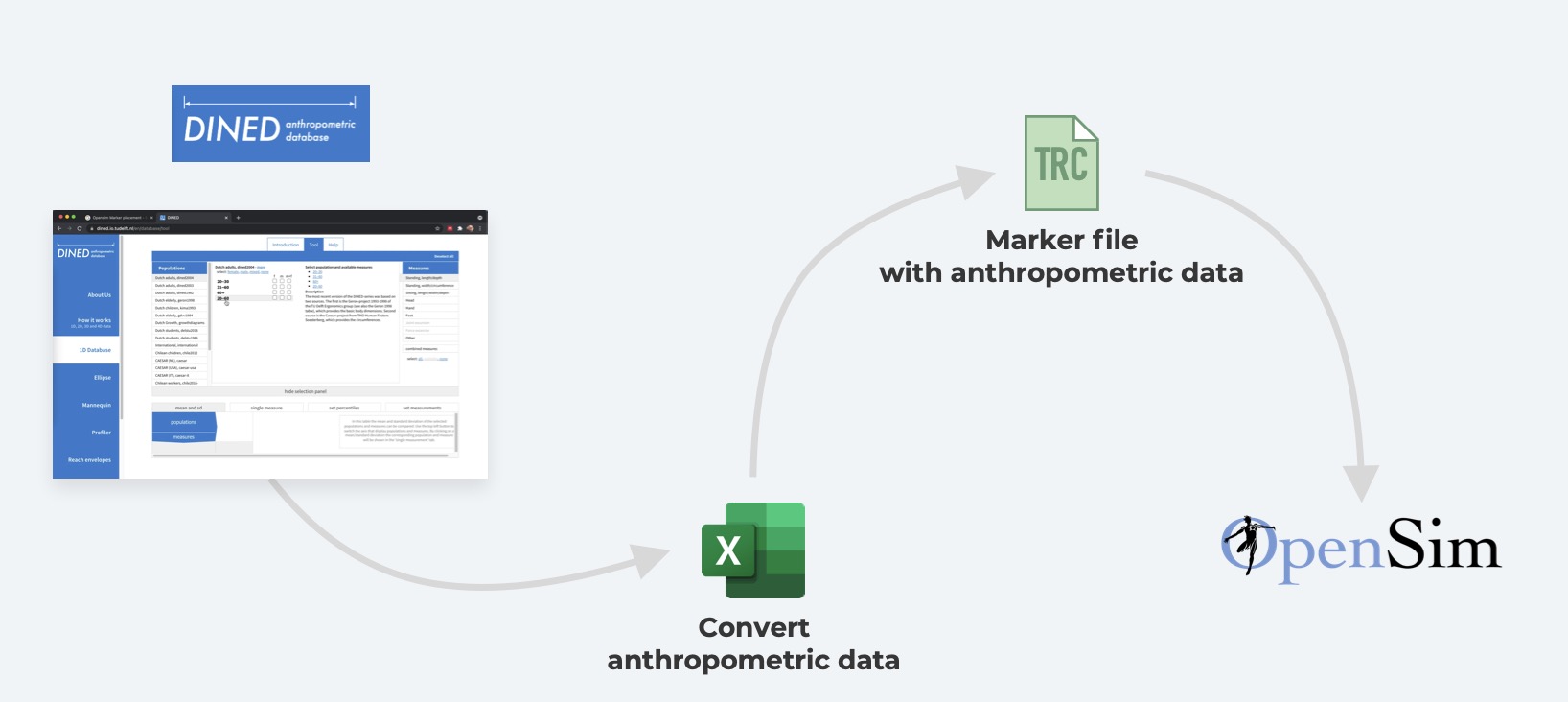

To scale the musculoskeletal model, we still need to collect the anthropometric data first, and then convert that data to a marker file that OpenSim recognizes. When we load the marker file in OpenSim, the musculoskeletal model is modified automatically.

Simulate Digital Human Movements

Which is the “best” method for simulating digital human movements?

There are many approaches to simulating digital human movement, such as keyframing, procedural animation, motion capture, and forward and inverse kinematics. Of these, motion capture produces the most realistic human movements. Therefore, motion capture was used to generate the human postures in OpenSim.

Since we are using motion capture, which sensor should we use? Kinect is a cheap solution for monitoring workers’ movements. The Kinect sensor is a motion-sensing device developed by Microsoft, and it is very cheap compared to other motion tracking devices.

How to simulate digital human movements with Kinect?

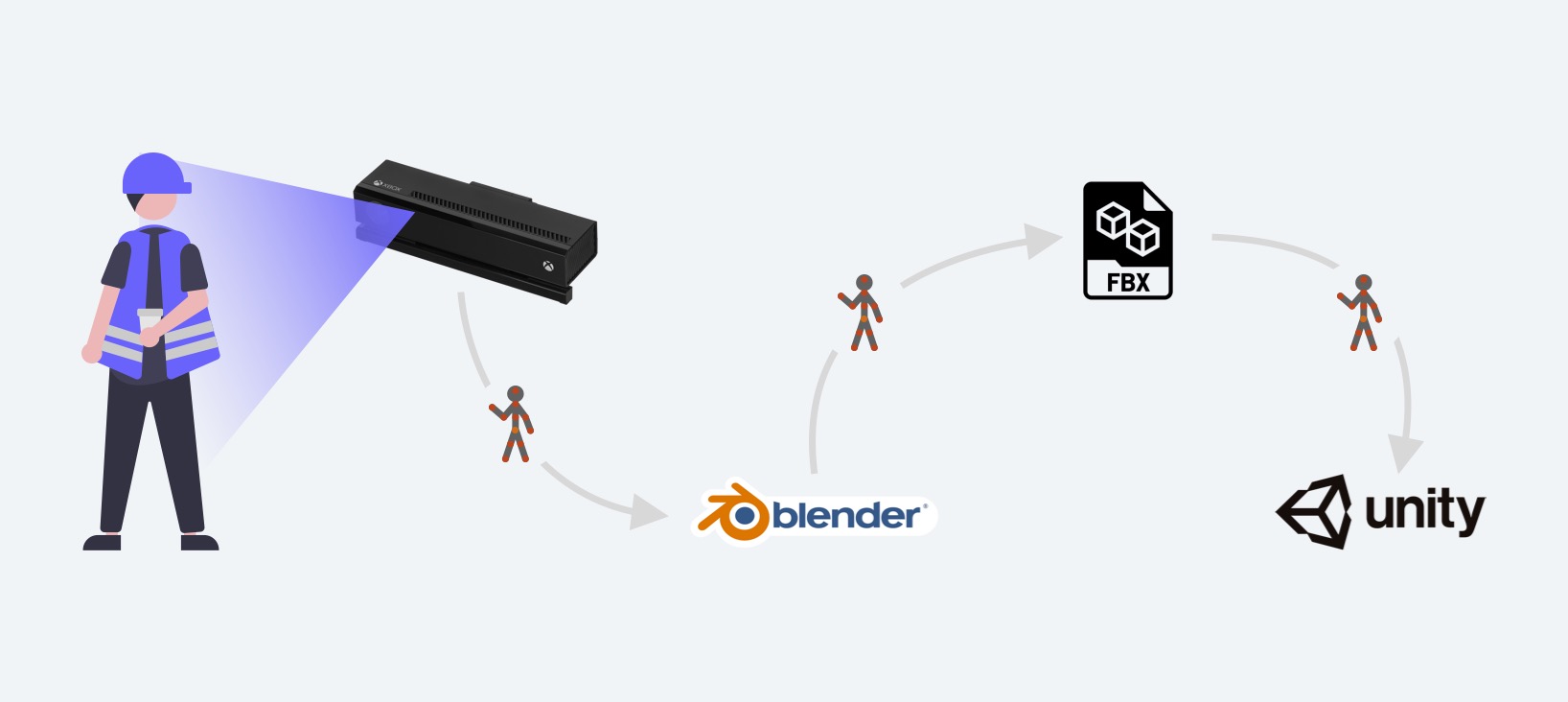

Eventually, we want to visualize the human movement in Unity. We can use Kinect to get raw motion data and import the data into Blender. Blender converts the data and saves it in an FBX file, and finally we can import the FBX file into Unity3D and visualize the movements. The drawback of this approach is that we cannot see the human movement in real time.

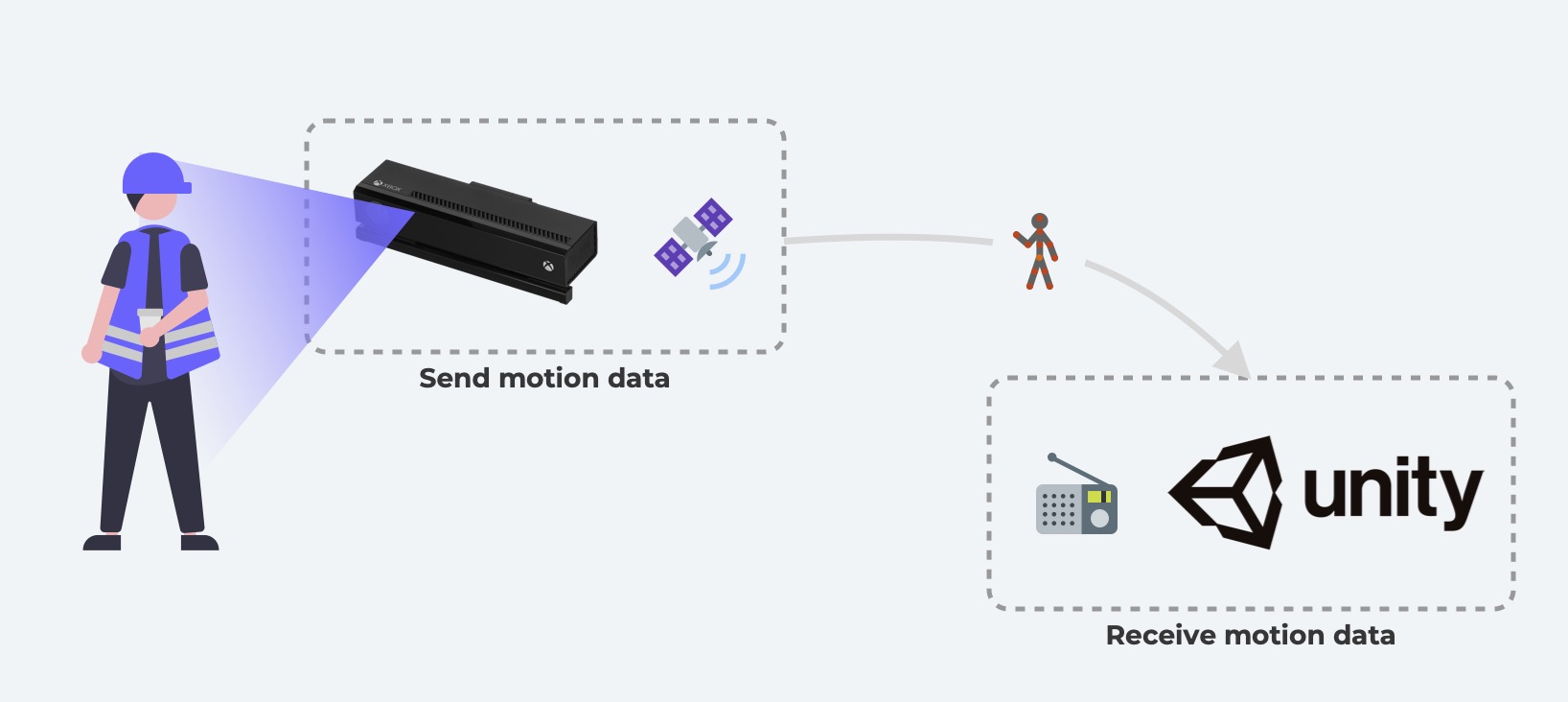

To achieve real-time visualization of the human movements, we can send the motion data directly to Unity. We can create a Kinect server to broadcast the motion data, and build a client in Unity to receive the motion data and visualize it.
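The server/client idea can be sketched in a few lines. The example below is a Python stand-in, not the thesis implementation: the real client lives in Unity, and the transport (UDP), the message format (JSON), and all function and joint names here are assumptions chosen for illustration.

```python
import json
import socket


def encode_frame(timestamp, joints):
    """Serialize one skeleton frame: joint name -> (x, y, z) position."""
    payload = {"t": timestamp, "joints": {k: list(v) for k, v in joints.items()}}
    return json.dumps(payload).encode("utf-8")


def decode_frame(data):
    """Inverse of encode_frame: return (timestamp, {joint: (x, y, z)})."""
    payload = json.loads(data.decode("utf-8"))
    joints = {k: tuple(v) for k, v in payload["joints"].items()}
    return payload["t"], joints


def send_frame(sock, address, timestamp, joints):
    """Server side: push one frame to the client as a single UDP datagram."""
    sock.sendto(encode_frame(timestamp, joints), address)


def receive_frame(sock, buffer_size=65535):
    """Client side: block until one frame arrives, then decode it."""
    data, _sender = sock.recvfrom(buffer_size)
    return decode_frame(data)


if __name__ == "__main__":
    # Loopback demo: both ends run in one process on 127.0.0.1.
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    receiver.settimeout(1.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    send_frame(sender, receiver.getsockname(), 0.0, {"HandRight": (0.3, 1.1, 0.5)})
    print(receive_frame(receiver))
    sender.close()
    receiver.close()
```

Sending one small datagram per frame keeps latency low, at the cost of occasionally dropping a frame, which is usually acceptable for live visualization.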

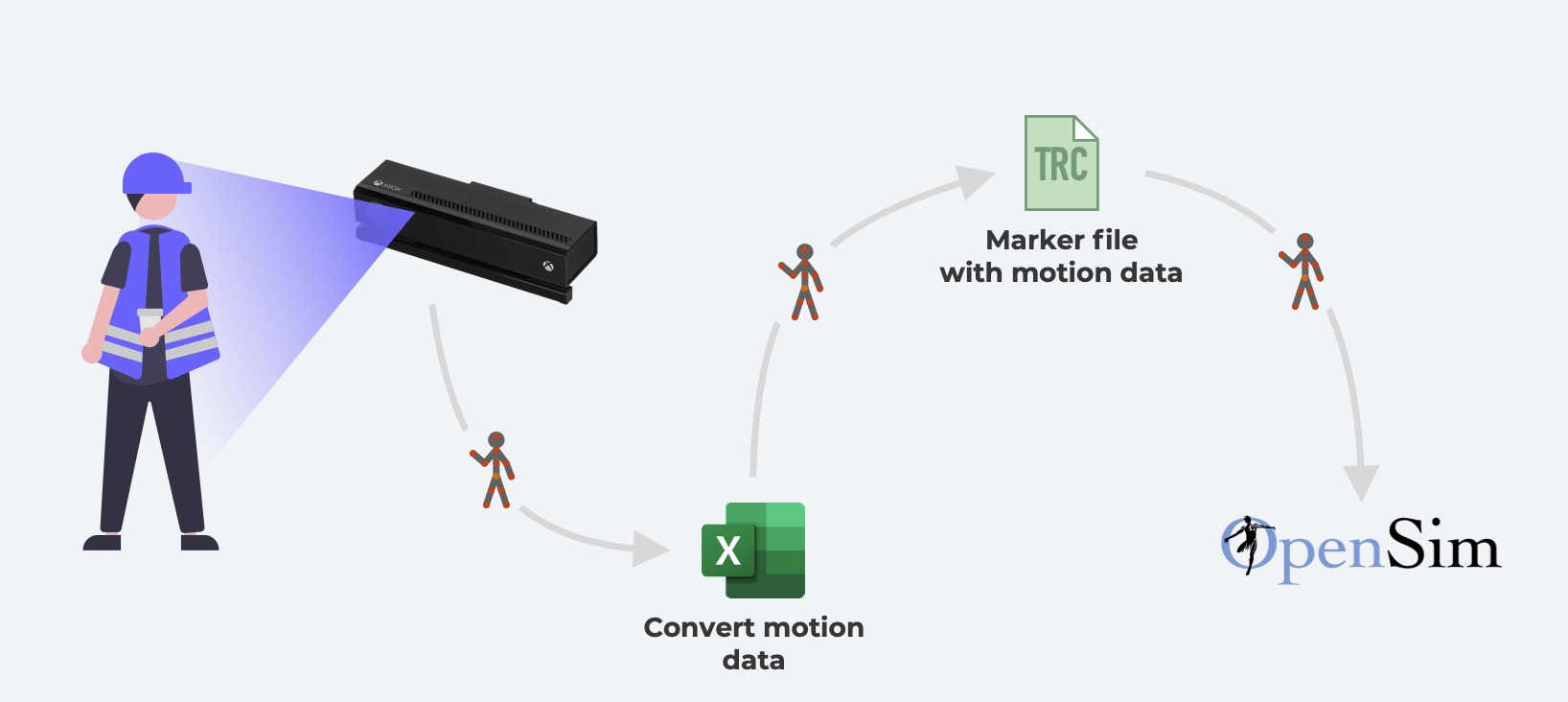

To simulate digital human movements for the musculoskeletal model in OpenSim, the process is a bit different. We need to use a toolkit to convert the Kinect motion data to a marker file that OpenSim supports. OpenSim will then recognize and reconstruct the human movements.
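As a rough idea of what such a conversion involves, the sketch below writes joint positions into a tab-separated marker file. It assumes the marker file is a TRC file, which the text above does not state, and the header layout follows the commonly described TRC format, so it should be checked against the OpenSim documentation before use. The function name, marker names, and values are hypothetical, and no coordinate-system conversion between Kinect and OpenSim is attempted.

```python
def build_trc(marker_names, frames, rate_hz=30.0, units="m", file_name="kinect.trc"):
    """Build TRC text. frames: list of {marker name: (x, y, z)}, one dict per frame."""
    n_frames, n_markers = len(frames), len(marker_names)
    lines = [
        f"PathFileType\t4\t(X/Y/Z)\t{file_name}",
        "DataRate\tCameraRate\tNumFrames\tNumMarkers\tUnits"
        "\tOrigDataRate\tOrigDataStartFrame\tOrigNumFrames",
        f"{rate_hz:g}\t{rate_hz:g}\t{n_frames}\t{n_markers}\t{units}"
        f"\t{rate_hz:g}\t1\t{n_frames}",
        # Each marker name spans three columns (X, Y, Z).
        "Frame#\tTime\t" + "\t\t\t".join(marker_names),
        "\t\t" + "\t".join(f"X{i}\tY{i}\tZ{i}" for i in range(1, n_markers + 1)),
        "",
    ]
    for index, frame in enumerate(frames):
        coords = [f"{value:.6f}" for name in marker_names for value in frame[name]]
        # Frame numbers start at 1; time is derived from the sampling rate.
        lines.append("\t".join([str(index + 1), f"{index / rate_hz:.6f}", *coords]))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Two made-up frames with two hypothetical markers (illustration only).
    demo_frames = [
        {"Head": (0.00, 1.60, 0.00), "Neck": (0.00, 1.45, 0.00)},
        {"Head": (0.01, 1.60, 0.00), "Neck": (0.01, 1.45, 0.00)},
    ]
    print(build_trc(["Head", "Neck"], demo_frames))
```

The marker names in the file must match the markers defined on the musculoskeletal model, so a real converter also needs a mapping from Kinect joint names to model marker names.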

Pilot Test

Wrap up everything and let’s have a test!

Before the test, we need to prepare a test flow. Let’s review the test flow again: the introduction and the warm-up task, followed by three manufacturing tasks. In task 1, the worker places the metal component in the pallet; in task 2, the worker installs the pallet in the machining center; then the machining center starts manufacturing; and in task 3, the worker removes the finished component.

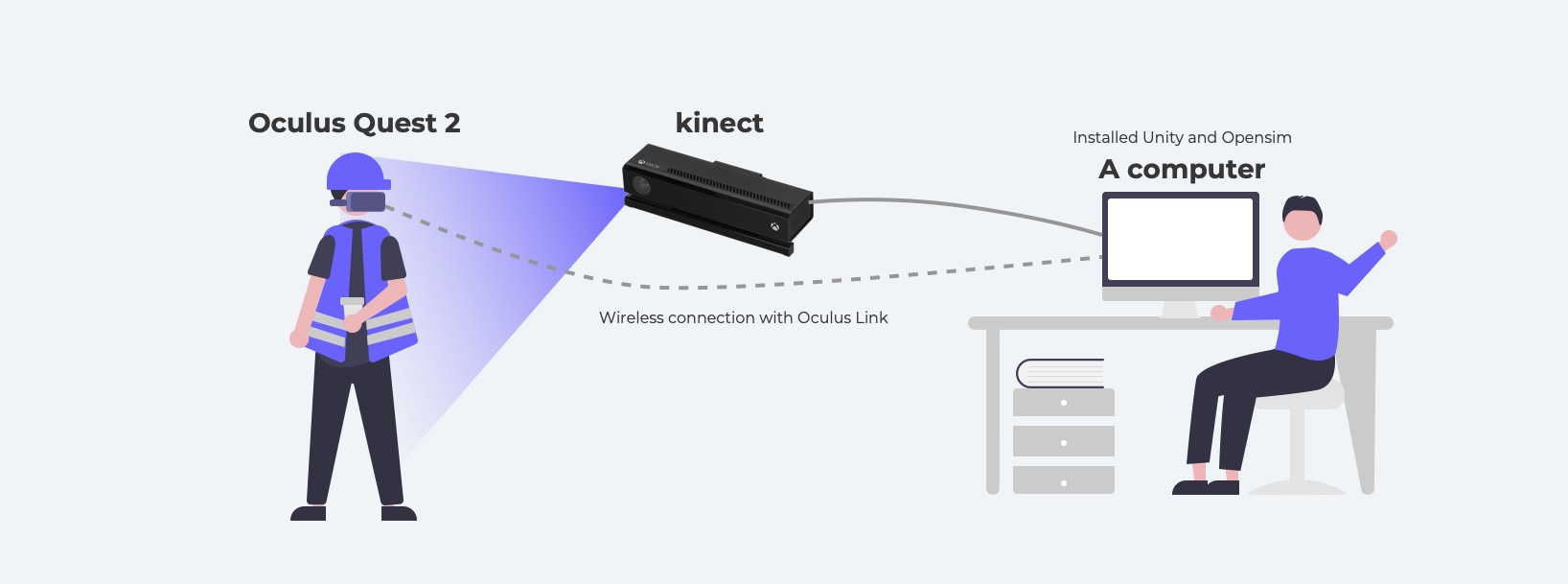

We also need to prepare the hardware and the test location. The hardware required is an Oculus Quest 2 device, a Kinect, and a computer with the VR scene and OpenSim installed. Since the actual test was conducted in VR, there were no excessive requirements for the physical location: a large room with good lighting is enough.

And here is the pilot test video. As you can see, the left side is the first-person view of the VR environment, the upper-right corner is the Kinect view with the skeleton attached to the subject, and the lower-right corner is the human movement reconstructed inside OpenSim.

The user can intuitively and subjectively evaluate the ergonomic factors in the VR scene, and the researchers can analyze the biomechanical factors in OpenSim later on.

From the participant’s comments: the scene was very realistic and there was no discomfort during the test. However, he felt the grab gesture was odd and not very intuitive. He also felt that these manufacturing activities would be dangerous in real life. Finally, he mentioned that he wished to see the full body in VR.

Look at the entire work from “God View”

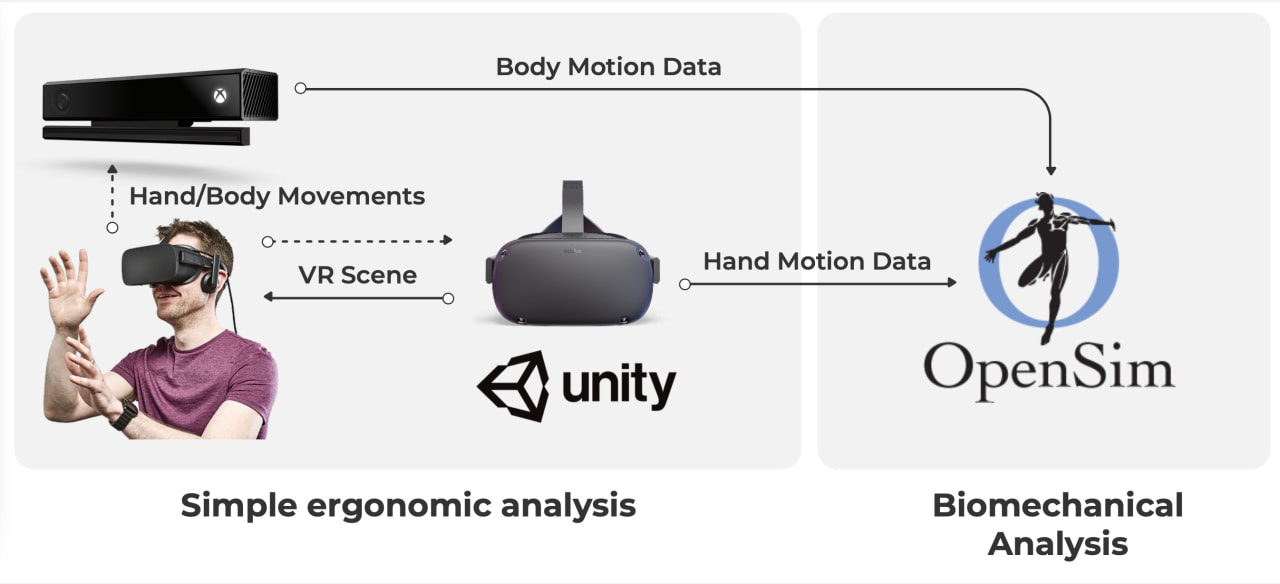

Here is a summary of the previous video. As you can see, two types of analyses were performed: the simple ergonomic analyses were performed in Unity, and the complex biomechanical analysis was performed in OpenSim.

In the simple analyses, we can see a VR headset in the center. The headset delivered the VR scene to the participant, and the participant interacted with the VR scene. Meanwhile, his hand and body movements were captured by the Oculus Quest and the Kinect, and the captured body and hand motion data was delivered to OpenSim. Eventually, this data can be used in OpenSim for biomechanical analysis.

Future Works

Improve the pickup gesture

The pickup gesture is not intuitive to the user, so it is necessary to improve the hand grasp effect. The video shows the latest improvements.

Embed simple human body model in first view

Integrating the digital human body into VR scenes can significantly improve immersion for the user. The current version only has the hands visible.

Integration of a manufacturing cobot

The virtual environment created so far does not include collaborative robots. Other students will continue working on the scene to add a UR5e cobot.