My Contribution

My personal contribution to this project focused mainly on researching existing work on HMI design, designing the HMI visuals, prototyping the interactive HMI in Unity3D (packaged as a Unity package), and integrating the HMI into the team's Unity project.

“TRUST IS THE MAJOR BARRIER”

Several sources predict that within the next decade (or, in the worst-case scenario, before 2050) the number of vehicles with increasingly high levels of automation on our roads will grow steadily, leading to improved road safety, new types of services and urban transformation.

However, all these transformations are hindered by several problems. One of the major barriers to the adoption of this technology is acceptance by the general public and, more specifically, trust in the technology itself.

Immersive, Interactable, Testable

The aim of this prototype is to create an immersive virtual scenario built on modern virtual reality technologies.

Users find themselves inside an SAE Level 5 car and can interact with all of the car's components.

The long-term goal of this simulation is to develop a series of tests that assess the factors affecting trust in this scenario.

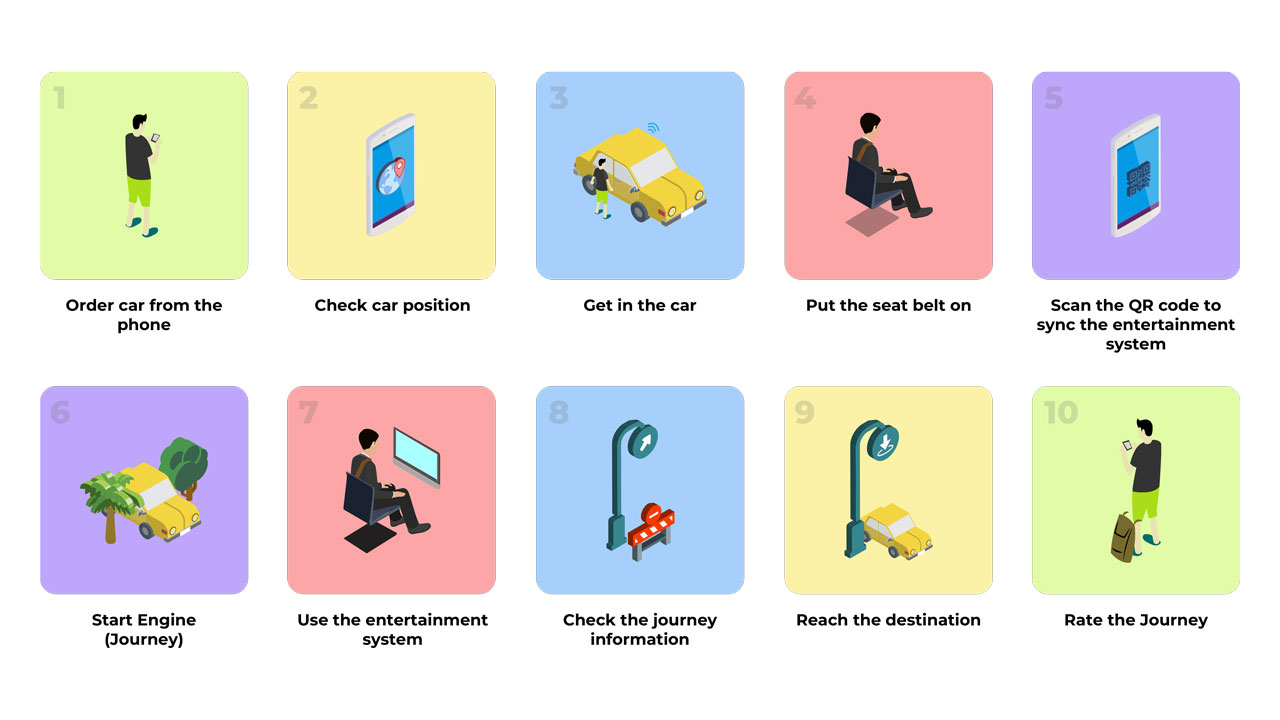

The works “Autonomous Vehicles Services in Nagoya, Japan” and “Mobility-as-a-Service and changes in travel preferences and travel behaviour” guided us in creating a scenario in which the car is not owned by the user but is part of a TaaS (Transportation as a Service) fleet. Users can “call” the autonomous car (through a smartphone application or a digital totem) to pick them up for a ride, following the recent trend of “servitization” in the automotive industry.

Interface Design

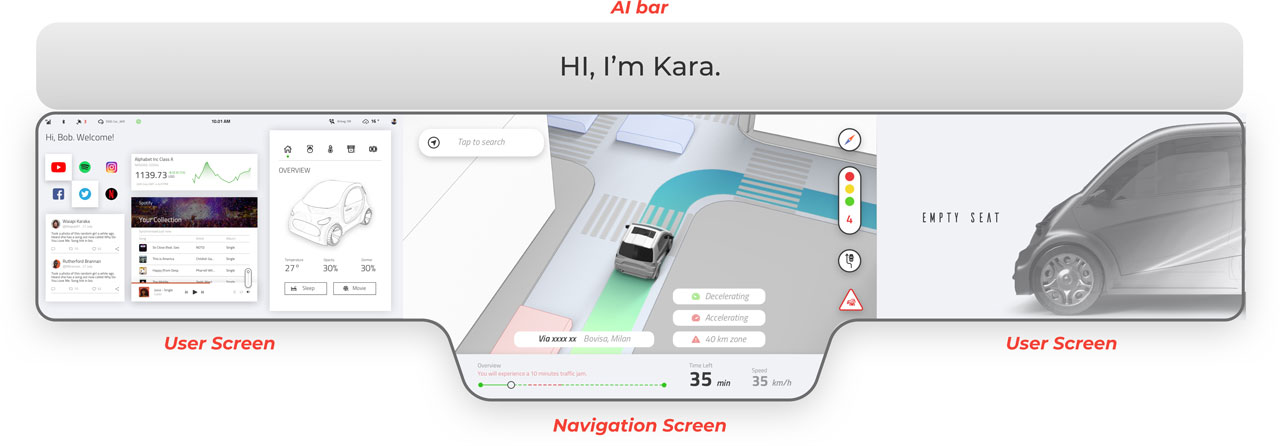

The visual interface spans three types of screen devices: a central touch-screen dashboard (containing two user screens and the navigation screen), a dedicated glass display called the AI bar, and the windshield screen. In the ongoing scientific research, the AI bar will be removed.

User Screen

This screen is meant to be personal and can be customised with third-party services (e.g. YouTube, Spotify, Twitter) by connecting each personal account to the car dashboard.

It also contains all the information that goes beyond monitoring the drive, giving the user complete control over the car's interior environment (panel on the right).

Navigation Screen

This screen contains all driving-related information, showing a map with a stylised representation of the car and its surroundings. At the bottom, fixed information (speed, time left, trip progress) can be read at a glance, as well as any potential problems on the road ahead. On the right side, the overtake notification, the traffic light representations and some standard road signs are displayed only when needed.
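To make the "only when needed" behaviour more concrete, the following minimal Python sketch shows one way the navigation screen's content could be split into always-visible widgets (bottom bar) and contextual widgets (right-side panel). The actual prototype was built in Unity; the names `DrivingContext`, `fixed_widgets` and `contextual_widgets`, as well as the 150 m traffic-light range, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical threshold: a traffic light is shown only once it is this close.
TRAFFIC_LIGHT_RANGE_M = 150.0


@dataclass
class DrivingContext:
    """Hypothetical snapshot of what the car perceives at one moment."""
    overtaking: bool = False
    traffic_light_state: Optional[str] = None      # e.g. "red"; None if no light ahead
    traffic_light_distance_m: Optional[float] = None
    road_signs: Tuple[str, ...] = ()               # standard signs detected ahead


def fixed_widgets(speed_kmh: float, minutes_left: int, progress: float) -> dict:
    """Bottom bar: always visible, regardless of the driving context."""
    return {
        "speed": f"{speed_kmh:.0f} km/h",
        "time_left": f"{minutes_left} min",
        "progress": f"{progress:.0%}",
    }


def contextual_widgets(ctx: DrivingContext) -> List[str]:
    """Right-side panel: each item appears only when it is currently relevant."""
    widgets: List[str] = []
    if ctx.overtaking:
        widgets.append("overtake")
    if (ctx.traffic_light_state is not None
            and ctx.traffic_light_distance_m is not None
            and ctx.traffic_light_distance_m <= TRAFFIC_LIGHT_RANGE_M):
        widgets.append(f"traffic_light:{ctx.traffic_light_state}")
    widgets.extend(f"sign:{sign}" for sign in ctx.road_signs)
    return widgets


if __name__ == "__main__":
    print(fixed_widgets(50, 12, 0.4))
    print(contextual_widgets(DrivingContext()))
    print(contextual_widgets(DrivingContext(overtaking=True,
                                            traffic_light_state="red",
                                            traffic_light_distance_m=80.0,
                                            road_signs=("stop",))))
```

Keeping the fixed and contextual parts in separate functions mirrors the layout described above: the bottom bar never changes shape, while the right-side panel stays empty in an uneventful situation and fills up only when the car's perception reports something worth showing.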

AI Assistant

The information displayed on the AI bar is purely textual, but combined with the complementary AI voice, it will form the main communication channel with the users.

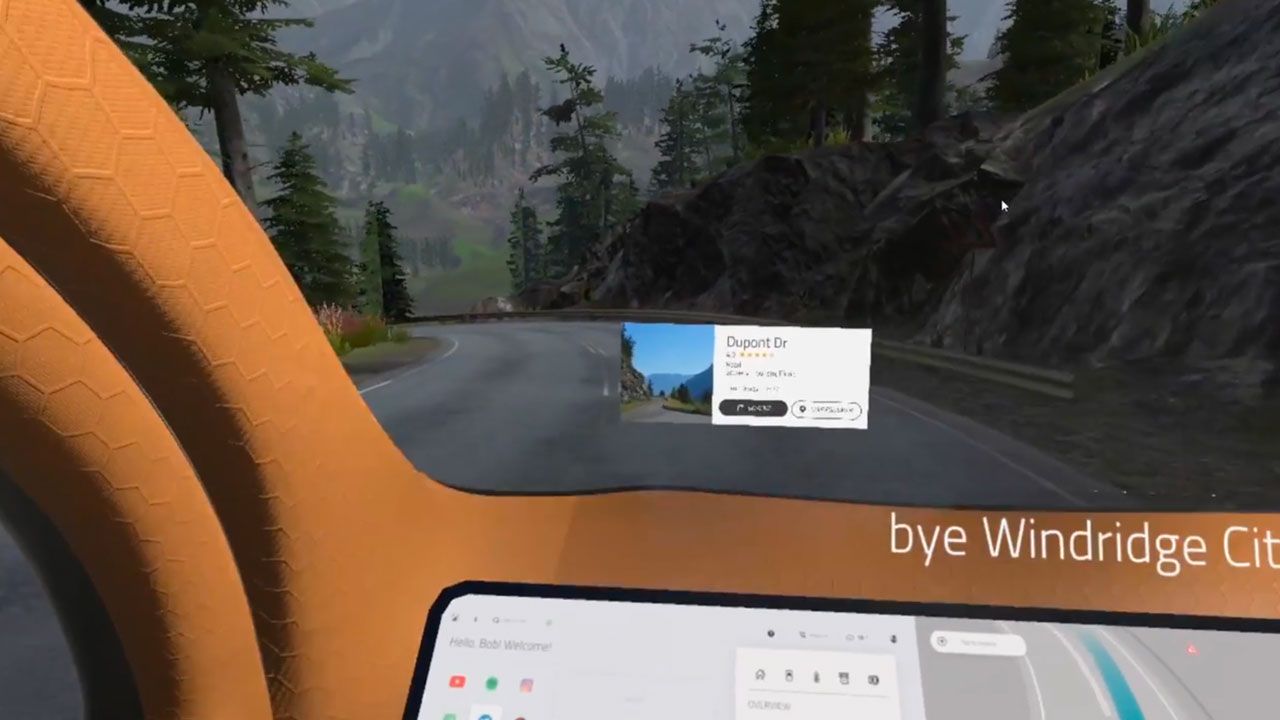

Windshield Display

The windshield display complements the navigation screen by notifying the user about location-based information, such as nearby sightseeing spots and advertisements from local businesses.
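Location-based notifications of this kind generally require selecting which points of interest are close enough to the car to be worth showing. The Python sketch below illustrates that selection step under stated assumptions: a fixed 500 m radius, great-circle (haversine) distance, and a hypothetical `PointOfInterest` record. It is not taken from the prototype, which was built in Unity.

```python
import math
from dataclasses import dataclass
from typing import List

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


@dataclass
class PointOfInterest:
    """Hypothetical record for something the windshield could highlight."""
    name: str
    kind: str  # assumed categories: "sightseeing" or "advertisement"
    lat: float
    lon: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_pois(car_lat: float, car_lon: float,
                pois: List[PointOfInterest],
                radius_m: float = 500.0) -> List[PointOfInterest]:
    """Return the POIs within radius_m of the car, nearest first."""
    hits = []
    for poi in pois:
        distance = haversine_m(car_lat, car_lon, poi.lat, poi.lon)
        if distance <= radius_m:
            hits.append((distance, poi))
    hits.sort(key=lambda hit: hit[0])
    return [poi for _, poi in hits]


if __name__ == "__main__":
    pois = [
        PointOfInterest("Shop B", "advertisement", 35.003, 136.0),
        PointOfInterest("Landmark A", "sightseeing", 35.001, 136.0),
        PointOfInterest("Far Place C", "sightseeing", 35.02, 136.0),
    ]
    print([p.name for p in nearby_pois(35.0, 136.0, pois)])
```

Sorting by distance gives the display a natural priority order, so a real implementation could cap the number of windshield notifications and still show the closest ones first.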

Technology References, Prototyping Tools and Future Research

AI Bar Glass Material: similar to LG Transparent OLED Signage

Windshield Display Material: Continental Automotive's Augmented-Reality HUD or the RICOH Automotive HUD

Darken / Brighten Glass: similar to Halio™, AGC’s smart-tinting glass

The core of the simulation consists of the Unity game engine, an HTC Vive Pro head-mounted display and the Leap Motion sensor. Several physiological parameters can be observed, such as skin conductance, heart rate response and eye movements (through eye tracking), and participants can also be questioned through surveys and interviews.